General Overview on a Castle Building System

While working on our upcoming game Castle Craft at Twin Earth, it became obvious that we would need a solid and performant system to let the player build awesome (and possibly also crazy) looking castles and structures.

After the initial brainstorming it was clear that the classic modular approach was off the table, especially because of the game's voxel approach (the number of mesh combinations required for such a thing would have been simply insane).

After a few calls with our lead 3D Artist Finn Meinert Matthiesen, I proposed that we try a procedural approach to building things, and we both started working on a basic prototype.

The basic idea was to create a sentient voxel (or cube) that could shape shift and “fuse” with its surroundings depending on certain combinations of neighbouring voxels/cubes, but the big question remained: how do we stay away from the countless combinations?

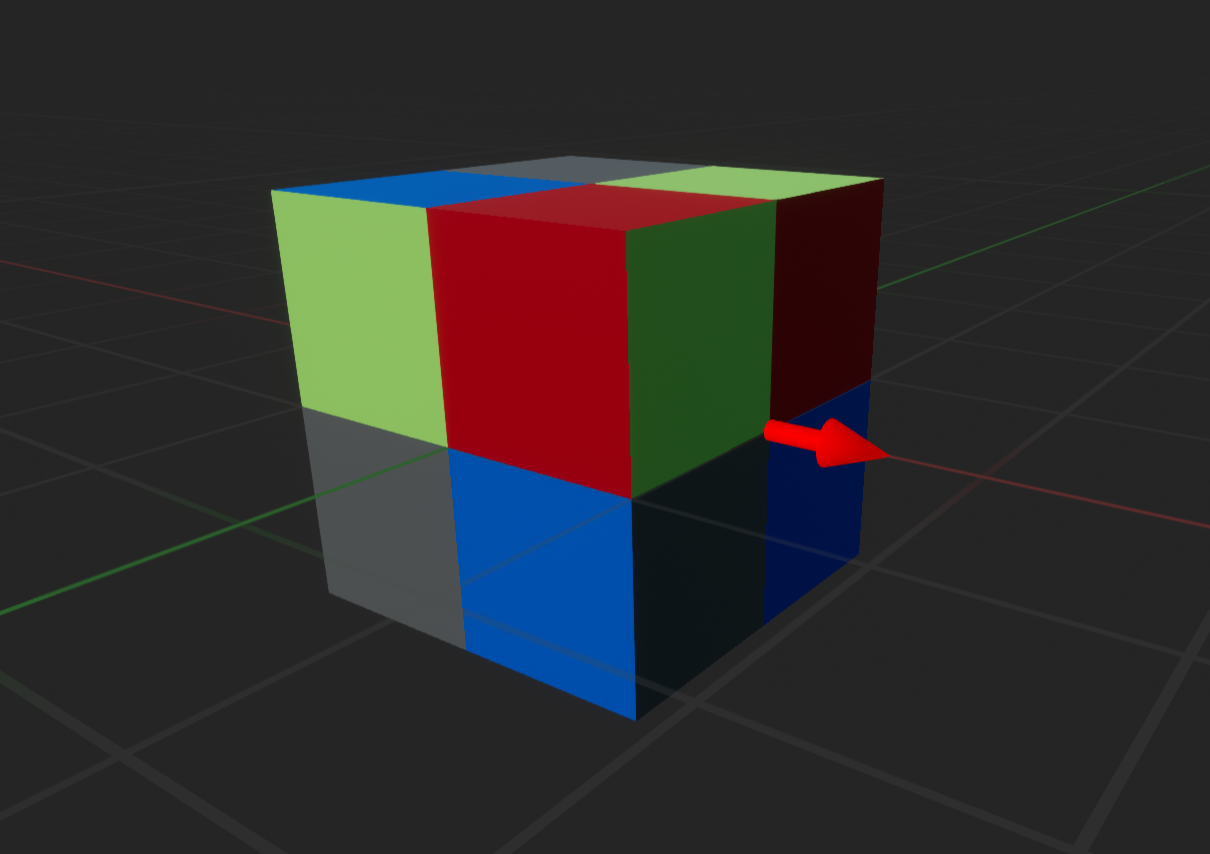

The solution I found was to split each cube face into 4 tiles (for a total of 24 meshes per cube) and have each of these tiles swapped depending on specific combinations of neighbours.

This way we could keep the number of meshes low but still be able to create interesting variations. So Finn started working on a first set of meshes that we could put together and fit the cube faces while giving the cube a more interesting look, especially when placed next to other cubes.
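To make the tile arithmetic concrete, here is a minimal sketch in plain C++ (not the actual game code) of how the 24 tiles of a cube could be addressed. The names `TileId`, `ToTileIndex` and `FromTileIndex`, as well as the face and quadrant ordering, are assumptions made up for illustration.

```cpp
// A cube has 6 faces and each face is split into 4 tiles.
constexpr int kFacesPerCube = 6;
constexpr int kTilesPerFace = 4;
constexpr int kTilesPerCube = kFacesPerCube * kTilesPerFace;

static_assert(kTilesPerCube == 24, "6 faces x 4 tiles should give 24 tiles");

// Hypothetical tile address: which face (0..5) and which quadrant (0..3).
struct TileId {
    int face;
    int quadrant;
};

// Flatten a (face, quadrant) pair into a single index in the range 0..23.
constexpr int ToTileIndex(TileId id) {
    return id.face * kTilesPerFace + id.quadrant;
}

// Recover the (face, quadrant) pair from a flat index.
constexpr TileId FromTileIndex(int index) {
    return TileId{index / kTilesPerFace, index % kTilesPerFace};
}
```

A flat index like this is handy when the per-tile mesh choice is stored in a fixed-size array of 24 entries per cube.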

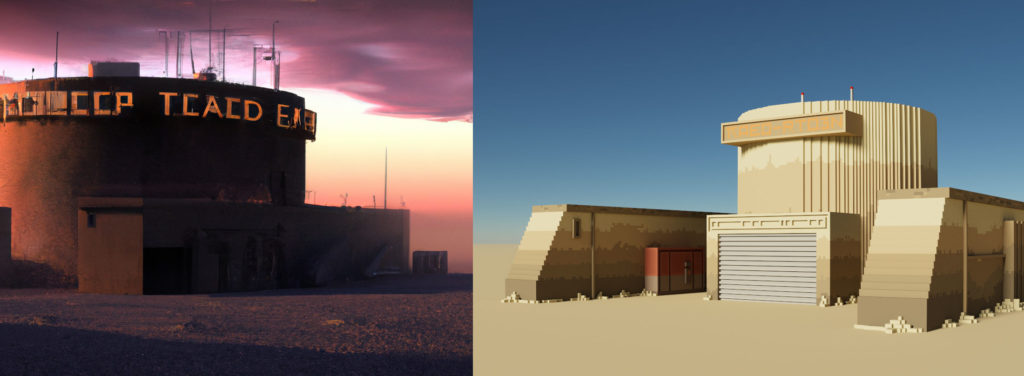

The first bunch of meshes Finn came up with looked pretty much like this:

Here is an example of the cubes that could potentially be built with just that minimal amount:

This solution introduced some factors that had to be taken into account, though. First, while the number of mesh types stayed minimal, the number of meshes rendered on screen per cube would increase from 1 to 24! Also, how would the cube detect its neighbours? And how would the different meshes be picked?

My first attempt was to make the cube an actor and use line traces / box traces to detect the neighbours. Needless to say, although the behaviour was correct, it failed miserably on performance.

The number of draw calls was quite high, and having tons of those actors performing line traces is a heavy load, even for the best CPUs and GPUs.

On the bright side, this attempt was very useful for me to test a system that could determine what type of mesh should be used and where.

The main idea was to detect the mesh needed for each of the 24 tiles by checking the configuration of its surrounding tiles.

The configuration would be the presence (or absence) of neighbouring tiles around a given one.

For example if only tiles 3, 4, 5 were present around this tile then the tile itself would be the top left corner. Likewise, if 3, 4, 5, 6, 7 were present, it would be a top edge.

Figuring out the other rules was pretty straightforward.
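The two rules quoted above can be sketched as a bitmask lookup. This is only an illustration in plain C++, not the actual implementation: I am assuming the 8 positions around a tile are numbered clockwise from the top-left (0=TL, 1=T, 2=TR, 3=R, 4=BR, 5=B, 6=BL, 7=L), which is consistent with both examples, and the names `TileShape`, `MakeMask` and `Classify` are hypothetical.

```cpp
#include <cstdint>
#include <initializer_list>

// Only the two shapes named in the text are modelled; every other
// configuration falls through to Unknown in this sketch.
enum class TileShape { TopLeftCorner, TopEdge, Unknown };

// Build a bitmask with one bit set per neighbour index that is present.
// Assumed numbering: 0=TL 1=T 2=TR 3=R 4=BR 5=B 6=BL 7=L.
inline std::uint8_t MakeMask(std::initializer_list<int> present) {
    std::uint8_t mask = 0;
    for (int index : present) {
        mask = static_cast<std::uint8_t>(mask | (1u << index));
    }
    return mask;
}

// Pick a shape from the exact set of neighbouring tiles that are present.
inline TileShape Classify(std::uint8_t neighbours) {
    if (neighbours == MakeMask({3, 4, 5})) {
        return TileShape::TopLeftCorner;  // only right, bottom-right, bottom
    }
    if (neighbours == MakeMask({3, 4, 5, 6, 7})) {
        return TileShape::TopEdge;        // everything except the top row
    }
    return TileShape::Unknown;
}
```

With 8 neighbours there are at most 256 masks, so the full rule set could also live in a 256-entry lookup table instead of a chain of comparisons.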

Once the rules were in place the cube was already capable of merging with the surrounding ones pretty nicely. As a safety measure, the cube’s rotation is also taken into account so that cubes with different rotations can still merge.

This was the success of this initial iteration. A set of rules had been defined, and they worked quite well too!

With that in mind, the challenges left were purely on the technical side, but fortunately for us Unreal Engine puts quite a number of tools at our disposal to deal with that.

The first matter to tackle was the large number of draw calls when using static meshes, and Unreal provides a really handy class that helps overcome that burden: Instanced Static Mesh (ISM).

ISM components have some limitations though. One of them is that you can’t have individual LODs for each instance. Luckily, their subclass, the Hierarchical Instanced Static Mesh (HISM) component, does allow LODs per instance, so a champion had been found.
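The reason instancing helps can be shown without any engine code. The sketch below, in plain C++, groups tile placements by mesh type, so the number of batches depends on how many distinct meshes exist rather than on how many tiles are placed. It does not use the Unreal API; `TilePlacement`, `Position` and `BuildBatches` are hypothetical names, and in the engine each batch would presumably correspond to one instanced component holding many instance transforms.

```cpp
#include <map>
#include <vector>

// Simplified stand-in for an instance transform (position only).
struct Position {
    float x;
    float y;
    float z;
};

// One tile to render: which mesh type it uses and where it goes.
struct TilePlacement {
    int meshType;
    Position position;
};

// Group all placements by mesh type. Each map entry plays the role of
// one instanced batch: a single mesh drawn at many positions.
inline std::map<int, std::vector<Position>> BuildBatches(
    const std::vector<TilePlacement>& tiles) {
    std::map<int, std::vector<Position>> batches;
    for (const TilePlacement& tile : tiles) {
        batches[tile.meshType].push_back(tile.position);
    }
    return batches;
}
```

However many cubes are placed, the number of batches is bounded by the size of the mesh set, which is what keeps the draw call count under control.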

Using HISM requires a completely different class hierarchy, so the idea of having actor cubes was benched (but this is good, since the AActor class has quite an overhead and we want tons of cubes, right?).

The new setup had to be a single actor handling cube generation via HISM components, which would provide the different pieces when required. But what should the cubes be then?

I opted for pure data and used UStruct (UObject was also a candidate, and a very good one at that, but it introduced a bit of overhead and the goal was to have tons of cubes).

This new setup turned out to be way more complex: line traces/box traces were not exactly convenient for detecting a cube’s neighbours, so I introduced a global grid, which meant more calculations to make. Having a grid not only eliminated the need for line traces of any sort but also gave us the tools to query a cube’s neighbours in many more ways (this comes in pretty handy when talking about damage propagation etc.).
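As a rough idea of what a grid lookup buys over traces, here is a minimal sketch in plain C++. It assumes cubes sit on integer grid coordinates and are stored in a hash map; `GridCoord`, `CubeData`, `CubeGrid` and the `health` field are hypothetical names for illustration, not the types used in the game.

```cpp
#include <array>
#include <cstddef>
#include <functional>
#include <unordered_map>
#include <vector>

// Integer cell coordinate in the global grid.
struct GridCoord {
    int x;
    int y;
    int z;
    bool operator==(const GridCoord& other) const {
        return x == other.x && y == other.y && z == other.z;
    }
};

// Simple hash that combines the three components.
struct GridCoordHash {
    std::size_t operator()(const GridCoord& c) const noexcept {
        std::size_t h = std::hash<int>{}(c.x);
        h = h * 31 + std::hash<int>{}(c.y);
        h = h * 31 + std::hash<int>{}(c.z);
        return h;
    }
};

// Pure-data cube; the payload here is only a placeholder.
struct CubeData {
    int health = 100;
};

class CubeGrid {
public:
    void Place(GridCoord coord, CubeData data) { cells_[coord] = data; }

    bool IsOccupied(GridCoord coord) const { return cells_.count(coord) != 0; }

    // Return the occupied cells that share a face with the given cell.
    std::vector<GridCoord> FaceNeighbours(GridCoord coord) const {
        static const std::array<GridCoord, 6> offsets = {{
            {1, 0, 0}, {-1, 0, 0}, {0, 1, 0},
            {0, -1, 0}, {0, 0, 1}, {0, 0, -1},
        }};
        std::vector<GridCoord> found;
        for (const GridCoord& o : offsets) {
            const GridCoord n{coord.x + o.x, coord.y + o.y, coord.z + o.z};
            if (IsOccupied(n)) {
                found.push_back(n);
            }
        }
        return found;
    }

private:
    std::unordered_map<GridCoord, CubeData, GridCoordHash> cells_;
};
```

A neighbour query becomes a handful of hash lookups instead of a physics trace, and the same structure can be walked outward from a cell for things like damage propagation.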

What’s Next?

What would you like to hear about next? A more specific view into the current system? Damage system? Cube preview (assets generation)? Solutions to shadows/collision? Let us know!